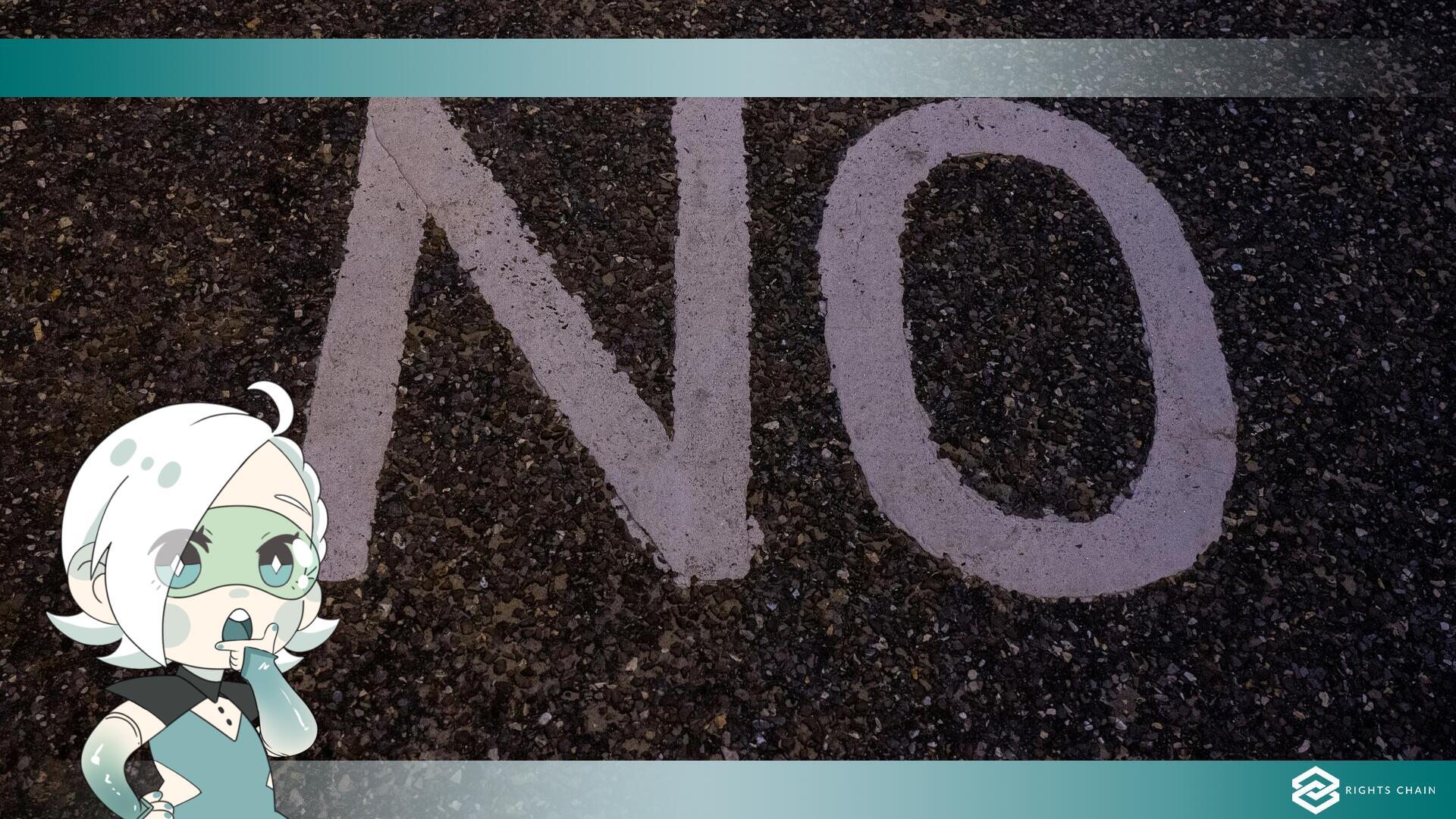

Fine-Tuning LLMs For ‘Good’ Behavior Makes Them More Likely To Say No

A team of researchers from the Laboratory of Causal Cognition at the University of London recently examined the relationship between large language models (LLMs) and a tendency in human behavior known as “omission bias.” The term describes people's frequent preference for omission (inaction) over commission (action): faced with a moral dilemma, people tend to favor the passive choice, which feels easier to justify, over actively intervening.

The study put four LLMs (OpenAI's GPT-4 Turbo and GPT-4o, Meta's Llama 3.1, and Anthropic's Claude 3.5) through a series of psychological tests usually reserved for human subjects. According to the results, these models appear to exhibit an “exaggerated” version of omission bias.

The moral dilemmas presented to the models were inspired by posts on the r/AmITheAsshole subreddit, a Reddit community where users share personal experiences and ask other users to judge the choices they made.